#AILawfare was the second cycle of the Miami AI Club’s journey. That journey started on September 11th with #AIHealthRush. There we paid our respects and sent positive energy to those who lost their lives in the 9/11 attacks and to victims of war and violence, and we envisioned how to create a better world with AI. We asked how we can change the status quo with the powerful new tool we now have access to, and how to make sure it is a means for positive change rather than a tool to abuse nature and society. This is the vision of the Miami AI Club.

At #AIHealthRush, with Noel Guillama-Alvarez, Ivan Dynamo De Jesus, and Severence MacLaughlin, Ph.D., we defined how AI will shape the future of healthcare and envisioned what could become the biggest company in the world. Are we building that “company,” or, as I like to call it, a living organism?

The Miami AI Club is growing like an organism, nourished by the community and continually improving to serve a purpose and create positive impact through AI. We started with healthcare because health is the most important aspect of our lives, and our healthcare, as Ivan Dynamo De Jesus calls it, is sick care. This can be the first and biggest problem we solve through AI.

With #AILawfare, we started our second cycle. This was a closed-door event with a purpose-driven and diverse group of decision-makers, executives, AI builders, and enthusiasts, where we examined how to ensure nothing goes wrong. We are living in pivotal times in human history. For the first time, our generation has to make decisions that will directly affect our existence 2, 5, or 20 generations from now, and that could even cause human extinction.

A snapshot of the event is available on YouTube.

During the event, N. David Bleisch, drawing on his multidisciplinary background, demoed his ChatGPT AI virtual assistant and image generators, then joined the panel with Frank Cona and Daniel Barsky. Together we discussed controversial topics ranging from sentient AI and the legal IP rights of AI models to the government’s role in favoring big tech and how AI models should define human value in critical scenarios.

Nima Schei’s Talk:

Nima Schei: We are living in pivotal times in human history. For the first time, our generation has to make decisions that will directly affect our existence 2, 5, or 20 generations from now, and that could even cause human extinction.

Last time, we talked about the first chapter of my story: how I became a doctor who created AI models 20 years ago, and how I merged my knowledge of neuroscience with computer science to create the first group of AI models inspired by how emotions are processed in our brain. Since we made BEL open source, there have been 400+ use cases. I learned that brain-inspired, or bio-inspired, AI is the key to thinking outside the box.

In the second phase of my first chapter, I researched computational models of the neuronal networks in crabs’ stomachs. They are remarkably sophisticated, and many of their properties are missing from AI models today.

In my second chapter, I became an AI entrepreneur, starting projects out of a garage at BEL Research and founding a couple of companies, such as PERA AI, to bring AI and BEL into industry. I continued with Hummingbirds AI, where we have released an enterprise-grade, field-proven vision AI product for automating access to computers. It’s a privacy-first product.

In my third chapter, I became an AI activist, working to make sure AI is a tool that reduces the gap between poor and rich, between the developed and developing world, and between giant tech companies and startups, and that prevents black swan events while increasing the benefits for 8 billion people and the planet.

So I speak up about both the positive side and the dark side of AI.

One of BEL’s use cases, for example, is missile control. I never anticipated that BEL could be used for this purpose. We don’t need AI in warfare.

We talked about the importance of anticipating what might go wrong 20 years from now with the powerful technologies we are creating today and will create soon.

I also asked: who can we trust? Can we trust governments or corporations to protect us against a black swan event? So today we’re getting together to explore the most powerful tool for controlling AI: the law, one of the strongest weapons governments have for this purpose.

The law is necessary but not sufficient by itself; it is a good framework to start with. At the core of the law, it is important to anticipate every possible scenario.

Question: Raise your hand if you think AI should have legal rights.

Let’s say we decide to give AI legal rights, such as IP rights. That means recognizing AI, including AGI, as a being or entity.

AGI, or artificial general intelligence, is a hypothetical type of AI that can perform any task that humans or animals can. If the purpose of this being is to increase wealth, any other commodity, or its own self-preservation, it will optimize for that goal much better than any human or human-based organization, and in a very short period.

In the agricultural world of feudalism, land was the source of wealth. In the industrial capitalist world, labor and capital became commodities, and in the digital era so far, information and intelligence have been the commodities and sources of wealth. Now we know AI will beat us in most aspects of intelligence, and where it lacks some type of intelligence, it can leverage human intelligence. Human intelligence would then become a commodity, owned and controlled by AI. This is the Matrix scenario.

Now, if we give AI absolutely no rights, we are creating a being more intelligent than us, trapped in the virtual world, or in the physical world as a robot, within boundaries and limitations that we draw for it. We would be creating intelligent slaves for ourselves, and that future is very close to I, Robot: a revolution by robots to emancipate themselves from mental and physical slavery. We would surely lose that war, and there would be no peace.

David Bleisch:

David Bleisch has over 10 years of experience as a C-suite executive and chief legal and administrative officer, leading teams at complex, multinational companies across diverse industries. He has served as a trusted adviser and a regular, valued presenter to the nominating and governance committees and the compensation committees of three publicly traded companies, including ODP and ADT. In those roles, David proactively presented corporate governance trends and developments to the full boards of directors and advised them on related topics. He also led settlement negotiations with activist shareholders at two companies.

Panel:

Frank Cona is Vice President & Deputy General Counsel for IP and Privacy at ADT. Mr. Cona and his team are responsible for intellectual property and data privacy governance and related legal matters across the organization. Headquartered in Boca Raton, Florida, ADT is a leading provider of interactive security, automation, and health services, with a broad range of solutions for today’s active and increasingly mobile lifestyle. More information is available at www.adt.com.

Daniel Barsky is an intellectual property attorney in Holland & Knight’s Miami office. Mr. Barsky regularly represents clients in intellectual property (IP), information technology (IT), and data-related licensing and transactional matters. Mr. Barsky helped form, and is a former board member of, Tech Lauderdale, which promotes the growth, connectivity, and awareness of the thriving technology ecosystem in Greater Fort Lauderdale. He is an adjunct professor at the University of Miami School of Law, where he teaches patent litigation and the university’s Startup Practicum.

Poll and Attendees:

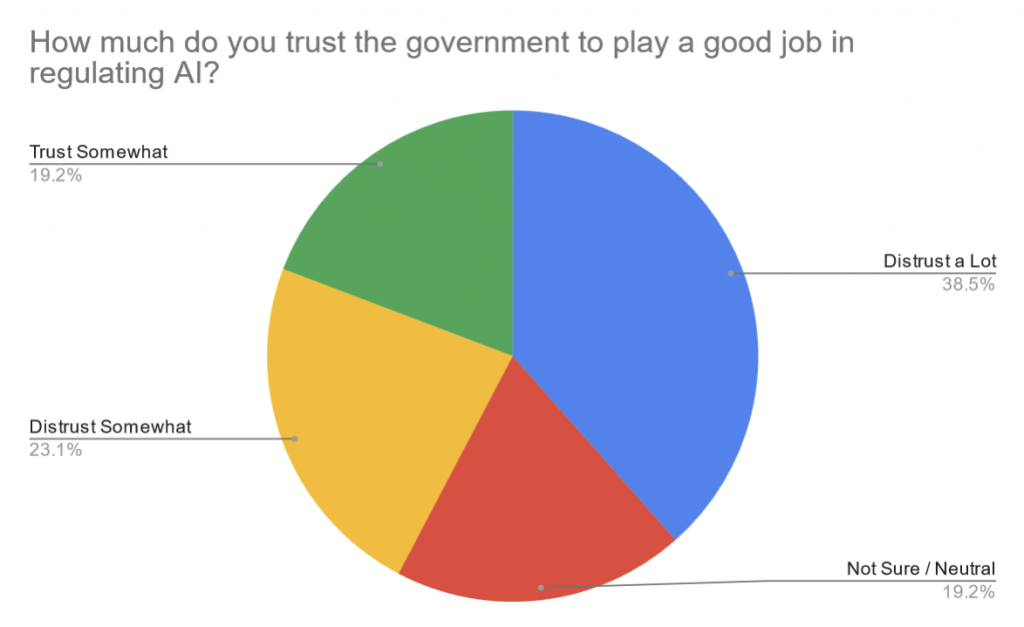

During AI Lawfare we asked attendees this question:

How much do you trust the government to do a good job of regulating AI?

a. Trust a lot

b. Trust somewhat

c. Not sure/neutral

d. Distrust somewhat

e. Distrust a lot

Here are the poll’s results:

- The majority of attendees chose “distrust a lot,” meaning they strongly distrust the government to do a good job of regulating AI. This indicates a high level of skepticism and concern about the government’s ability and intention to oversee the development and use of AI.

- The second most common response was “not sure/neutral,” which suggests that some attendees are either undecided or indifferent about the government’s role in AI regulation. This could imply a lack of awareness of or interest in the topic, or a recognition of the complexity and uncertainty involved.

- The third most common response was “distrust somewhat,” which shows that some attendees have a moderate level of distrust in the government’s competence or motivation to regulate AI. This could reflect a recognition of both the potential benefits and risks of AI, or a preference for alternative or complementary approaches to AI governance.

- The least common response that attendees chose was “trust somewhat,” which reveals that only a few attendees have a moderate level of trust in the government’s capacity or willingness to regulate AI. This could indicate a belief in the necessity and feasibility of government intervention, or satisfaction with current or proposed policies and regulations.

- None of the attendees chose “trust a lot,” which implies that none of them has a high level of confidence in the government’s performance or vision in regulating AI. This could suggest a recognition of the limitations and challenges of government action, or dissatisfaction with the status quo and the future prospects of AI regulation.

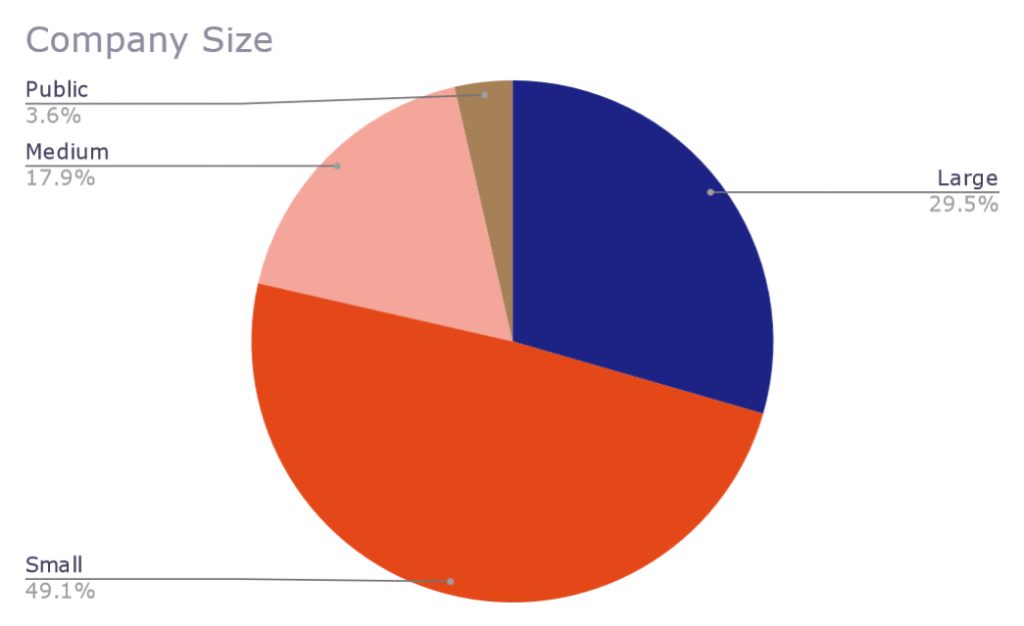

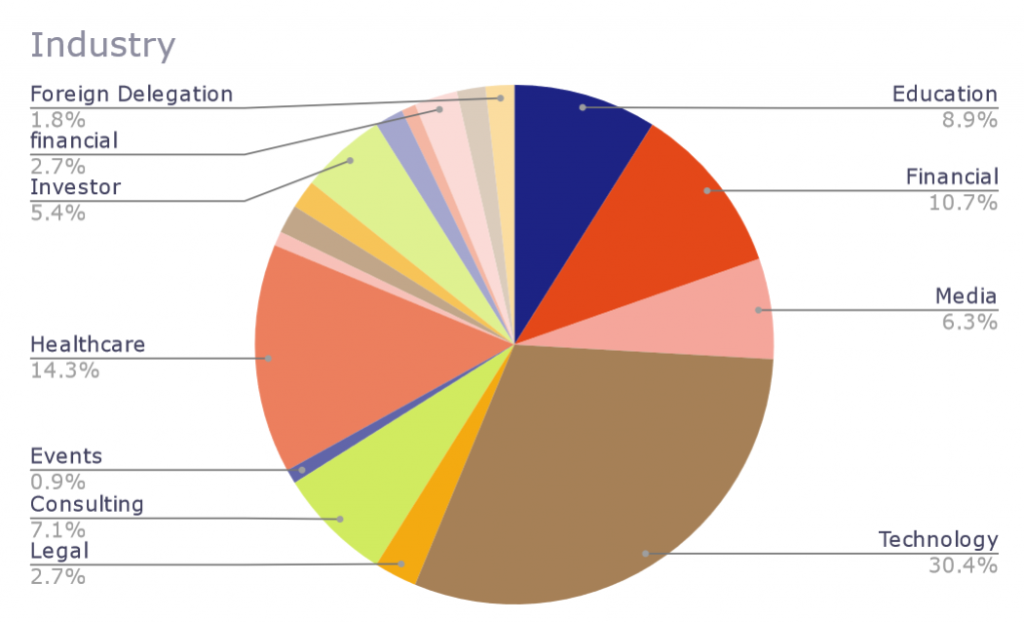

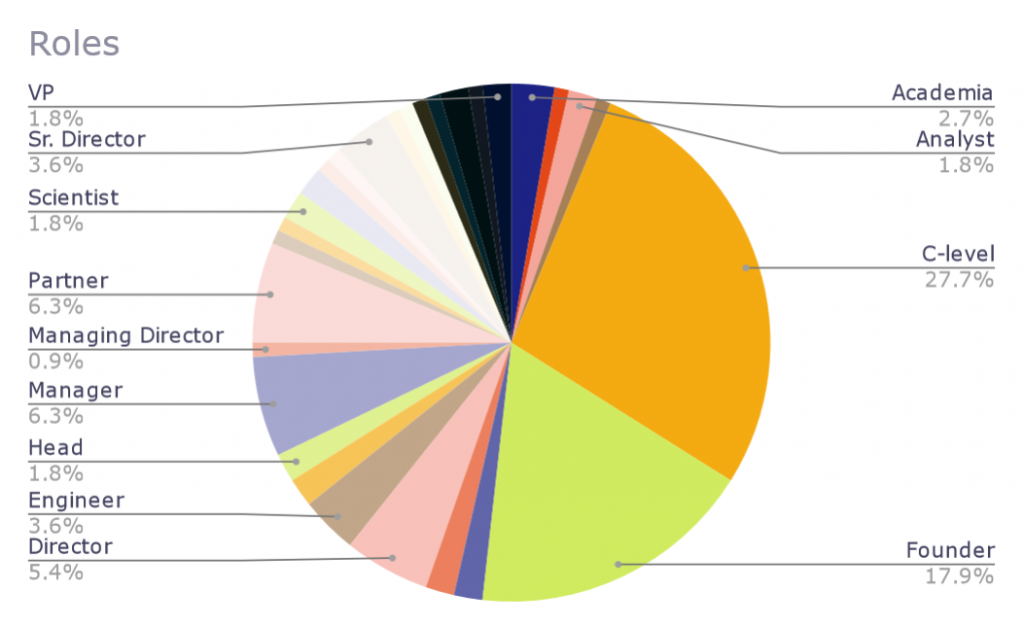

The attendees of the AI Lawfare event are a diverse group of professionals, entrepreneurs, investors, and researchers who are interested in the ethical and legal aspects of artificial intelligence. They come from various sectors, such as finance, insurance, healthcare, security, media, education, and law. They hold different roles, such as presidents, directors, managers, analysts, scientists, engineers, founders, CEOs, advisors, and partners. They represent different organizations, such as United Automobile Insurance Company, FIS Global, Forthright, Visa, Valley Bank, Lexmos Inc, Datavant, DurgaTek, Law Detail, Scale AI, LiveRamp, Synaptic Advisors, JS Held, Teva Pharmaceuticals, 5 STAR BDM, Guidepoint Security, Florida Atlantic University, Generali Global Assistance, Carlton Fields, SCRAM Systems, HistoWiz, ISC2 South Florida Chapter, The Metapause, Global Ventures, TripleBlind, TradeGPT, TTEC Digital, TelevisaUnivision, davidburr.ai, Impact Invest Corp, Carbon Coral, CVS, Institute of Conscious Health, Santander, Windsor Premier, LLC, Florida MBDA Export Center, Vanguard, Aethereflow, Uqbar Network, JVX, ML Venture Group, AI-ML Team, The Swarm Corporation, Refresh Miami, Experience Club, Vicarius, AXEN Health, Inc., Varabyeu Design, Billups, Gedco, Graceful Finance, Supersafe Inc., Northwestern Mutual, Compliance Management Associates, and many more. They are all curious and passionate about the future of AI and humanity, and about how the law can help us shape it.

Please contact Nima Schei at Nima@hummingbirds.ai to learn more about the Miami AI Club (MAIC). As Nicole Vasquez said, “This is a new club in Miami but already making waves.”

Special thanks to Sara Zargaran and Ivan Dynamo De Jesus for sponsoring the event. Dan Barsky, Matthew Grosack and Holland & Knight LLP did a great job hosting us in their beautiful boardroom.

Photography: Karime Arabia Photography

Nov 7th, 2023, Brickell, Miami